I spent the whole of February visiting Professor Roger Azevedo and his team at North Carolina State University. Roger Azevedo is a professor in the Department of Psychology, and he and his team study cognition, metacognition, motivation and affect in the context of self-regulated learning in computer-based learning environments. The SMART lab and LET have a similar research focus, and the two teams have collaborated for years, as both aim to understand learning processes using advanced technologies (e.g., virtual learning environments, physiological sensors).

My PhD studies focus on exploring patterns of regulation in collaborative learning situations. In my recent work I have been struggling to integrate heart rate measures collected during collaborative learning with video data coding, so the SMART lab seemed like the perfect destination to deepen my understanding of using multimodal methods in learning research. The visit was also an exciting opportunity to gain insight into daily life at the SMART lab.

During my stay I had the chance to discuss best practices and current problems in analyzing multimodal data with the SMART team members (who were indeed smart!), and I also presented my work and got valuable feedback.

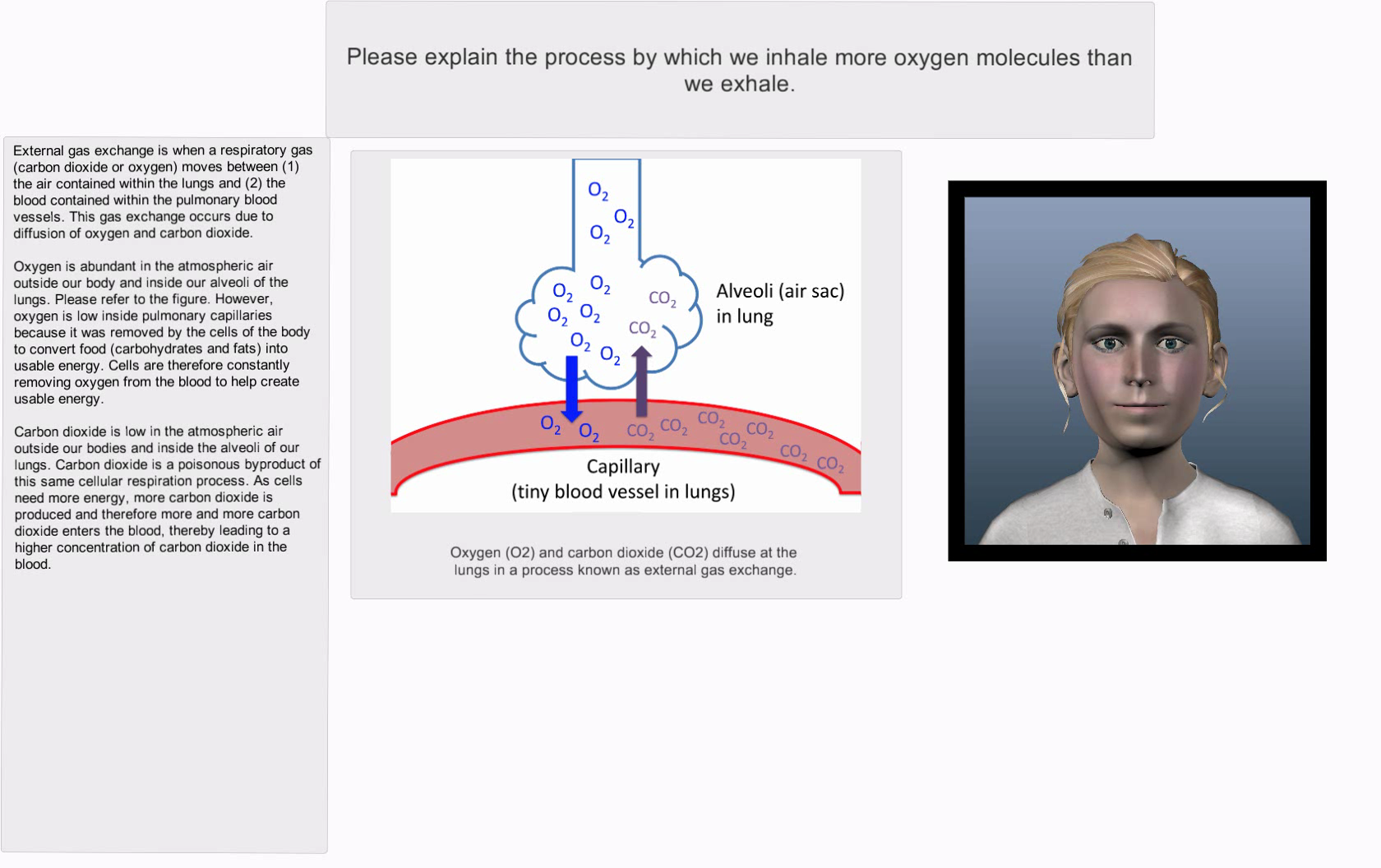

At the time of my visit there were several ongoing data collections. One was related to Metatutor IVH, which uses an Intelligent Virtual Human to help students determine whether the content they are reading is useful for them. Another data collection took place at Cary Academy, a local private school, where high school students were asked to complete a gamified STEM task in virtual reality developed by Lucid dream. It was really interesting to see how intuitively the students oriented themselves in the environment and how excited both the teachers and students were (not to mention the researchers). For more information about ongoing projects at the SMART lab, see: https://psychology.chass.ncsu.edu/smartlab/#projects

As VR equipment becomes more readily available and affordable, how we can use it to better understand learning is a timely question. One unique aspect of collecting data in a VR environment is that it is easy to record where users looked, what they "touched" and where they turned. This provides insight into what learners do and how their strategies change over time. I also started wondering how a collaborative VR environment could be set up.

Besides visiting the SMART lab, I also had the chance to meet Jeff Greene and his research team at Chapel Hill, where I presented my research and took part in interesting theoretical discussions. Thank you for the warm welcome!

I am very grateful to Roger and the whole team for making me feel part of the SMART family and for this learning opportunity! I am looking forward to possible future collaborations with the SMART team.

Author

Marta Sobocinski, doctoral student at LET